TL;DR

Combining many HTTP requests into fewer is a great way to improve front-end performance.

However, the obsession with blindly reducing down to one file isn’t healthy. Having a few files (instead of many or one) will tend to give better performance, because it allows cache lengths tuned to each file, more fine-grained cache-retention, and faster parallel loading.

I’m firing off this post because of stuff I’m seeing circulate on Twitter at the moment, but I may come back and revisit this topic in greater detail at a later time. Here’s what sparked today’s rant:

“Once IE6 and IE7 go away for good, there really shouldn’t be the need to use CSS sprites (…) at all.” nczonline.net/blog/2010/07/0… by @slicknet

— David Bruant (@DavidBruant) May 6, 2013

The suggestion advocated in the linked blog post (which is admittedly almost 3 years old!) by @slicknet is essentially that using CSS sprites is a “not-so-good practice” and that we should instead be using image data URIs embedded directly into our CSS files.

The premise for this technique, as well as for the ever popular suggestion that you should combine all your JS into a single file, comes from the original rule in the “big 14” (since expanded to 35+) performance rules that Steve Souders codified while he was at Yahoo.

The “rule” in question is Minimize HTTP Requests, and the claim is, “This is the most important guideline for improving performance for first time visitors.” So, that makes it sound like it’s pretty darn important, and thus almost all front-end developers have adopted this rule into their default mindset. It’s an almost “universal truth” in webdev that for production deployments, all files need to be concatenated into one.

Now, that’s not technically true, unless you’re the Google home page, which inlines literally everything. Instead, it’s usually said that we should combine as many files as possible into as few files as possible, and in practice this works out to combining all CSS into one file, all JS into one file, and all images into one file. Or, at least, that’s the holy grail of front-end performance.

The rule states:

80% of the end-user response time is spent on the front-end. Most of this time is tied up in downloading all the components in the page: images, stylesheets, scripts, Flash, etc. Reducing the number of components in turn reduces the number of HTTP requests required to render the page. This is the key to faster pages.

…

Combined files are a way to reduce the number of HTTP requests by combining all scripts into a single script, and similarly combining all CSS into a single stylesheet. Combining files is more challenging when the scripts and stylesheets vary from page to page, but making this part of your release process improves response times.

So, here’s my problem with this “rule”. Developers will often read a guideline or rule and, in reducing it to practice, take it to its logical extreme conclusion. So, the rule suggests minimizing (aka, “reducing”) the number of requests, but we as developers interpret that to mean that we need to get down to one (or zero!) requests.

I don’t think this is a healthy mindset. I don’t even think this approach works as a good first pass at the “low hanging fruit”. I think it dangerously and blindly overlooks some very important trade-off balances that mature performance optimization must deal with.

Look Closer

Here’s how I would (and do!) teach that performance rule to front-end engineers. I’m reading between the lines, and combining that with lots of real-world experience, instead of just the black-and-white on the page.

If you have 20+ files (say, JS files) that you’re currently loading on your page, that’s too many. You need to shoot for getting that down to 5 or below, ideally, maybe even 2 or 3. But even if you only get down to 10 files, you’ve still cut your request count in half.

You see, this rule should be stressing reducing HTTP requests, not getting HTTP requests to the bare minimum possible at all costs. I don’t know which one was Steve’s original intent, but I can tell you unequivocally, my mountain of experience in this area tells me the former is more effective and more mature than the latter.

Why 3 instead of 1?

So, if moving from 20 down to 3 is great, why isn’t going all the way down to 1 even better?

Let me address that question generically first (that is, for any and all front-end resources: JS, CSS, and images), and then I’ll come back and address some resource-specific concerns.

- Caching (cache-length and cache-retention)

- Parallel Loading (loading bytes in parallel can be faster)

Caching

The biggest concern I have with blindly combining as many files as possible into as few files as possible is that it undermines a very powerful feature inherent to the way browsers work: caching.

No, I don’t mean that caching can’t work on a single file. I mean that caching can only work on the single file.

WAT?

The entire file behaves the same way with respect to caching, particularly cache length. You can’t tell parts of a file to be cached for one length of time, and other parts of the file to be cached for a different length of time.

Stop for a moment and think about the front-end resources on your site. Is every single JS source file on your site exactly the same in terms of its volatility? That is, when you make any change to one character in one of your JS files, do you also change at least one character in every other JS file, such that they all need to be re-downloaded?

Chances are, the answer is no. And chances are, the answer would similarly be no for CSS and even probably for images, too.

What does this mean? It means that the mid-term (and long-term) performance of your site is going to suffer if you blindly combine volatile (frequently changing) resources together with stable (infrequently changing) resources.

Every time you change one character in your site’s UX code file, you’re going to force the re-download not only of that file’s bytes, but also all the stable unchanged bytes from all your 3rd-party libraries you included, like jQuery, etc. In many cases, that can be hundreds of KBs of unnecessary re-download when the difference was only a few KB in one small file that you tweaked.
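To put rough numbers on that, here is a minimal sketch of the re-download cost after one small file changes. The file names, sizes, and the `group` labels are all made up for illustration, and `redownloadKb` is a hypothetical helper, not part of any tool.

```javascript
// Hypothetical file sizes in KB; none of these numbers come from a real site.
const files = [
  { name: "jquery.js", kb: 90, group: "stable" },
  { name: "plugins.js", kb: 60, group: "stable" },
  { name: "site-ui.js", kb: 12, group: "volatile" },
];

// KB a returning visitor must re-download after `changedName` changes,
// given a function that maps each file to the bundle it ships in.
function redownloadKb(files, changedName, bundleOf) {
  const changed = files.find((f) => f.name === changedName);
  if (!changed) throw new Error("unknown file: " + changedName);
  const dirtyBundle = bundleOf(changed);
  return files
    .filter((f) => bundleOf(f) === dirtyBundle)
    .reduce((sum, f) => sum + f.kb, 0);
}

// Everything in one bundle vs. one bundle per volatility group.
const oneBundle = redownloadKb(files, "site-ui.js", () => "all.js");
const byVolatility = redownloadKb(files, "site-ui.js", (f) => f.group + ".js");

console.log(oneBundle);    // 162
console.log(byVolatility); // 12
```

With a single bundle, a 12 KB tweak invalidates all 162 KB; grouped by volatility, only the 12 KB bundle is invalidated.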

My suggestion: analyze your resources’ volatility, and group your files into 2 or 3 groups, and set different cache-length rules for each. Your volatile (quickly changing) code file needs a shorter cache length (maybe 48 hours?) and your stable code file needs a longer cache length (maybe 1 month?). Note: in practice, I don’t find that cache lengths greater than 1 month really matter that much, because…

There’s also the issue of cache-retention.

Browsers are free to retain, or not retain in our case, resource files for a site, regardless of their expiration lengths. They make these determinations based on a variety of factors, including memory limitations on the device, length of time since the resource was accessed (LRU), and other such things.

Guess what happens when the browser considers ejecting a single large file with all your JS in it. Intuitively, you might want it to take into account which parts of the file are stable, which are used more often, and so on. What will happen, however, is a single decision to retain or eject that resource from the cache. It cannot split the file up and get rid of only part of it. Talk about throwing the baby out with the bathwater.

If you want the cache-retention rules to have a chance at ejecting smaller and more frequently updated files while keeping the bigger and more stable files, they have to actually be in separate files. Duh.

Any strategy which combines files ruthlessly and doesn’t consider (and balance) the impact on caching is a failed strategy.
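The volatility grouping suggested above could be wired up on the server side along these lines. This is only a sketch: the group names are hypothetical, "1 month" is read as 30 days, and the lifetimes are the rough 48-hour and 1-month figures from the text, not tuned recommendations.

```javascript
// Cache lifetimes from the rough suggestions above (48 hours, 1 month).
// Assumption: "1 month" is treated as 30 days; group names are hypothetical.
const HOUR = 60 * 60; // seconds
const MAX_AGE_SECONDS = new Map([
  ["volatile", 48 * HOUR],
  ["stable", 30 * 24 * HOUR],
]);

// Build the Cache-Control header value for a bundle in the given group.
function cacheControlFor(group) {
  if (!MAX_AGE_SECONDS.has(group)) {
    throw new Error("unknown group: " + group);
  }
  return `max-age=${MAX_AGE_SECONDS.get(group)}`;
}

console.log(cacheControlFor("volatile")); // max-age=172800
console.log(cacheControlFor("stable"));   // max-age=2592000
```

Each bundle then gets its own header, which is exactly what a single combined file cannot express.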

Parallel Loading

One of the best performance features browsers ever gave us was the ability for them to load more than one file at a time. Browsers loading two JS files (from two <script> tags) in parallel was a huge leap forward in performance optimization on the web, without the web authors having to do anything.

The “Minimize HTTP Requests” rule is based on the fundamental idea that a single HTTP request comes with, comparatively speaking, quite a bit of HTTP overhead on top of the actual content of the request. Reducing requests is one sure-fire way to reduce overhead.

However, for that “comparatively” to actually apply, the resource needs to be at or below a certain size. Above some size, the content of the request dwarfs the HTTP overhead, and at that point the penalty of the HTTP overhead is no longer the only (or primary) concern.

So, what would be the counter-consideration if not HTTP overhead? Parallel loading, that’s what.

Consider this: what if there were a file size, one we could reasonably determine, above which a file took longer to load on average than if it had been broken into two roughly equal-sized chunks and loaded in parallel? How could this be? Because the parallel loading effect would be enough to overcome the HTTP overhead of the second request.

Intuitively, such a number must exist. Practically, finding a universal number is nearly impossible.
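The intuition can be illustrated with a deliberately simple toy model. Everything here is an assumption made for the sketch: each connection transfers at a fixed rate, the two halves don't compete for bandwidth, and splitting costs a fixed extra penalty for the second request. The numbers are arbitrary, so the break-even size it prints says nothing about real networks.

```javascript
// Toy model, not a measurement. Assumptions (all hypothetical):
// - each connection transfers at a fixed `bytesPerMs`
// - every load pays `latencyMs` once
// - splitting adds `splitPenaltyMs` for the second request's overhead
function singleLoadMs(bytes, { latencyMs, bytesPerMs }) {
  return latencyMs + bytes / bytesPerMs;
}

function splitLoadMs(bytes, { latencyMs, bytesPerMs, splitPenaltyMs }) {
  // Two equal halves download at the same time, so only one half's
  // transfer time counts, plus the extra overhead of the second request.
  return latencyMs + splitPenaltyMs + bytes / 2 / bytesPerMs;
}

// Size at which both strategies take the same time in this model:
// bytes / rate = penalty + bytes / (2 * rate)  =>  bytes = 2 * penalty * rate
function breakEvenBytes({ bytesPerMs, splitPenaltyMs }) {
  return 2 * splitPenaltyMs * bytesPerMs;
}

const net = { latencyMs: 100, bytesPerMs: 500, splitPenaltyMs: 40 };

console.log(breakEvenBytes(net)); // 40000
console.log(singleLoadMs(10000, net), splitLoadMs(10000, net));   // 120 150
console.log(singleLoadMs(200000, net), splitLoadMs(200000, net)); // 500 340
```

Below the break-even size, the second request's overhead dominates and splitting loses; above it, the parallel transfer wins. Where that line falls on a real site can only be found by testing.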

But I’ve done a bunch of testing with JavaScript file loading over the years. And what I’ve found is that this number, in most of my cases, was around 100-125k. That is, if my single combined file (regardless of how many files of whatever sizes were initially combined) is greater than 125k in size, which on JS-heavy sites is quite easy to do, then attempting the chunk-and-parallel-load has a reasonable chance of improving loading performance.

Notice closely what I’m suggesting: consider, and test, if the technique of concatenation+chunking+parallel gets you faster loads. I’m not saying it always will, and I’m not saying that the 125k number is universal. Only that it’s a rough guide that I’ve found over years of my own usage and testing.

Note: please don’t try to chunk a 10k file in half and load in parallel. That’s almost certainly going to result in slower loads. For you to see a good improvement in parallel loading (overcoming the HTTP request overhead), you need to have chunks roughly equal in size, and they should each be at least 50-60k, in my experience.
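As a sketch of how a build step might apply those two constraints (roughly equal chunks, each above a size floor), the hypothetical `splitInTwo` helper below picks the split point in an ordered file list that best balances the two halves, and refuses to split when either half would be too small. The 50 KB default is just the rough floor mentioned above, and the file names and sizes are invented.

```javascript
// Split an ordered list of source files into two chunks of roughly equal
// size, keeping the original order (script order often matters).
// Returns a single chunk when either half would fall below `minChunkBytes`.
function splitInTwo(files, minChunkBytes = 50 * 1024) {
  const total = files.reduce((sum, f) => sum + f.bytes, 0);
  let bestIndex = 0;
  let bestGap = Infinity;
  let prefix = 0;
  for (let i = 0; i < files.length - 1; i++) {
    prefix += files[i].bytes;
    const gap = Math.abs(total - 2 * prefix); // |second half - first half|
    if (gap < bestGap) {
      bestGap = gap;
      bestIndex = i + 1;
    }
  }
  const first = files.slice(0, bestIndex);
  const second = files.slice(bestIndex);
  const firstBytes = first.reduce((sum, f) => sum + f.bytes, 0);
  const smallerHalf = Math.min(firstBytes, total - firstBytes);
  if (bestIndex === 0 || smallerHalf < minChunkBytes) {
    return [files]; // not worth splitting
  }
  return [first, second];
}

const KB = 1024;
const heavy = [
  { name: "lib-a.js", bytes: 40 * KB },
  { name: "lib-b.js", bytes: 30 * KB },
  { name: "app.js", bytes: 50 * KB },
  { name: "widgets.js", bytes: 20 * KB },
];
const light = [
  { name: "tiny-a.js", bytes: 6 * KB },
  { name: "tiny-b.js", bytes: 4 * KB },
];

console.log(splitInTwo(heavy).map((c) => c.map((f) => f.name)));
// [ [ 'lib-a.js', 'lib-b.js' ], [ 'app.js', 'widgets.js' ] ]
console.log(splitInTwo(light).length); // 1
```

The 140 KB set splits into two 70 KB halves, while the 10 KB set stays as one file.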

I’m suggesting that you should do more than just “Minimize HTTP Requests”. You should first minimize, then try out un-minimizing (chunk+parallel load) in a limited fashion, to see if you too can get faster loads.

I’ve been saying this for years, and most of the time when people try it out, they tell me they see some improvement. That’s the best “proof” I can offer.

How to chunk your file(s), if you decide to do so, can follow any number of strategies. I talked above about volatility and cache-expiration lengths as one good strategy. Another one might be to chunk your concatenated file in a couple of different slices for different parts of your site. Try it out and see what happens.

CSS + Data URIs

Back to the original tweet that sparked this post. It was suggested in that blog post, and the tweets which have gone out since, that one effective way to reduce HTTP requests is to take that single CSS sprite image file (note: you’ve already gone from 50 image files down to 1) and do away with it, and instead put image content directly into your CSS file, via data URIs.

So we’re already reducing the potential benefit to being from 1 down to 0, instead of the typical 50-to-1 gains you can easily see. But what are we doing instead?

First, we’re saying that none of our images need to be individually cacheable or parallel-loadable (a decision which was actually already made when we chose spriting, to be fair). Second, we’re saying that our images and our CSS can be combined together, with just one cache-length and just one serial file load (no parallel loading of those bytes).

I think this is a dangerous thing to advocate as an across-the-board strategy. There might be some value in limited situations of moving some of your images into your CSS, especially small icon files. But just blindly moving all your images into CSS makes very little sense to me, and it makes even less sense when it’s suggested that this is an improvement over image spriting.

If you can honestly say that every time you tweak a single property in one of your dozens of CSS source files, you really do want all your images (and your CSS!) to be re-downloaded, fine. But you are almost certainly in the tiny minority. I think that kind of situation is extremely rare across the broader web.
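To make the coupling concrete, here is a minimal sketch of what inlining an image into CSS involves, written as a Node build step might do it. `inlineImageRule` and the `.icon-home` selector are hypothetical, and the "image" is just 300 placeholder bytes rather than a real PNG.

```javascript
// Build a CSS rule that inlines image bytes as a base64 data URI.
// `selector` and `mimeType` are caller-supplied; nothing here is specific
// to any real build tool.
function inlineImageRule(selector, mimeType, bytes) {
  const base64 = Buffer.from(bytes).toString("base64");
  return `${selector} { background-image: url("data:${mimeType};base64,${base64}"); }`;
}

// Stand-in bytes, not a real image: only the size matters here.
const fakeIcon = new Uint8Array(300);
const rule = inlineImageRule(".icon-home", "image/png", fakeIcon);

console.log(fakeIcon.length); // 300
console.log(Buffer.from(fakeIcon).toString("base64").length); // 400
console.log(rule.slice(0, 60));
```

The image bytes now live inside the stylesheet text, so they share its cache lifetime and are re-downloaded whenever any rule in that file changes. Base64 also turns every 3 bytes into 4 characters, which is why 300 raw bytes become 400 characters before compression.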

Side note: some suggest that instead of combining your data URIs into your main stylesheet, you’d have a separate CSS file just for your data URIs, and load those as two separate files.

My question: how is that different from the image sprite technique they were trying to do away with? If I’m using a build tool and/or preprocessor to generate these things, it’s equally easy for that tool to generate an image sprite with associated CSS as it is for it to generate the data URI CSS. That’s a wash. It also doesn’t reduce the coupling between images and CSS (in fact, it increases it).

JS Loading

A lot of what I’ve talked about so far is in relation to general resource loading. But it turns out it’s especially true for JS file loading.

If your strategy involves concatenating all of your JS files into just one file, and even self-hosting popular CDN’d libraries like jQuery in that file, I think you’re missing out on some potential performance improvements.

But there’s one last thing to mention: dynamic parallel JS loading is not just about loading 2 files instead of 1. It’s also about un-pinning the JS loading from the DOM-ready event blocking that naturally occurs when loading scripts with a <script> tag.

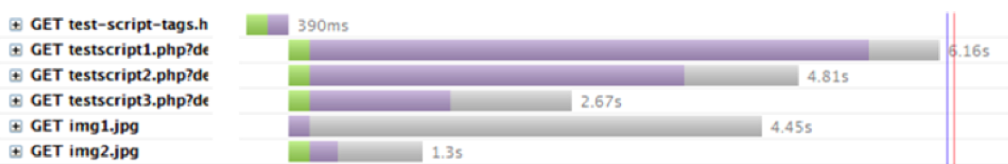

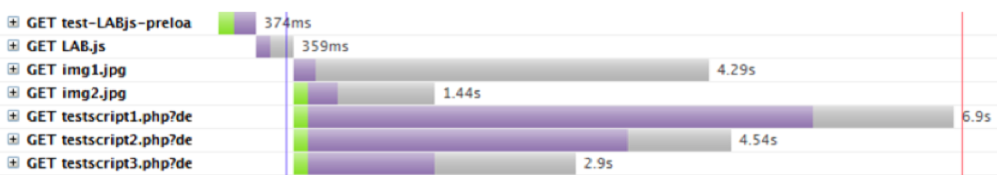

Examine these two screenshots of “waterfall” diagrams with 3 script files and two images loading:

The top image uses <script> tags, and the bottom image uses a dynamic parallel script loader (like my LABjs loader).

The differences in loading time are actually statistically insignificant (as repeated tests would show). The shape of these diagrams is roughly the same in terms of loading.

The big difference here is the placement of the blue line, which represents the DOM-ready event. With <script> tags, the browser has to assume the worst (that document.write() might be present in those files) and thus blocks the DOM-ready event until they’re all done loading and executing. But with dynamic loading, you are expressly not using document.write() (because it will break your page!), and thus the browser can let DOM-ready fire much earlier.

This doesn’t do anything to improve the actual load performance of your page, but it has a huge impact on the perceived performance of the page. The DOM-ready event is the point-in-time when the browser knows enough about the structure of the page to safely let the user start interacting (scrolling, selecting text, etc). It’s also the time when most JS libs fire off events that modify the page.

The faster that DOM-ready fires, the faster your page will feel. So, dynamic parallel script loading also helps your page feel faster, in addition to actually going faster.
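For readers who haven’t seen dynamic script loading, the core of the technique looks roughly like the sketch below. This is not the LABjs API: `loadScripts` is a hypothetical helper, the URLs are placeholders, and the document is passed in as `doc` only so the function can be exercised outside a browser.

```javascript
// Minimal sketch of dynamic parallel script loading. This is NOT the LABjs
// API; `loadScripts` is a hypothetical helper. `doc` is passed in so the
// function can be exercised outside a browser.
function loadScripts(doc, urls) {
  return urls.map((url) => {
    const script = doc.createElement("script");
    script.src = url;
    // Script-inserted scripts download in parallel; async = false asks the
    // browser to still execute them in insertion order.
    script.async = false;
    doc.head.appendChild(script);
    return script;
  });
}

// In a browser (placeholder URLs):
// loadScripts(document, ["/js/stable.js", "/js/volatile.js"]);
```

Because these scripts are inserted by script rather than written into the markup as blocking <script> tags, the parser doesn’t stop and wait for them. A real loader adds error handling, load callbacks, and fallbacks for older browsers on top of this.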

That’s All, Folks

So, there ya go, my argument for why just concatenating all your files into one is only part of the story. To really get the best loading performance out of your sites, you should also pay attention to, and maturely balance, cache-length, cache-retention, and parallel-loading.

Hopefully that helps provide a useful sanity check on performance rule #1.